
AI method achieves “True Perspective” imaging of in vivo deep tissue

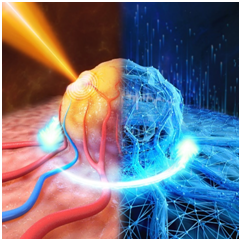

Schematic of the deep learning-based QOAT method

A research team at Tianjin University (TJU) has achieved the first “True Perspective” imaging of the optical absorption of in vivo deep tissue, using a deep learning-based quantitative optoacoustic method. The results were published in Optica, a leading international optics journal, on January 6, 2022. Associate Professor Jiao Li is the first author; Associate Professor Biao Sun and Professor Feng Gao from TJU and Professor Vasilis Ntziachristos from the Technical University of Munich are co-corresponding authors.

The method offers a high-spatial-resolution quantitative imaging strategy for mapping blood oxygenation characteristics related to the physiology and pathology of living tissue. It could also be used for early tumor detection, distinguishing benign from malignant tumors, and in vivo monitoring and quantitative evaluation of anticancer drug efficacy.

Quantitative optoacoustic tomography (QOAT), which combines the functional contrast of optical imaging with the high resolution of ultrasound imaging, is an emerging non-invasive biomedical imaging technology. QOAT aims to obtain images of the optical absorption coefficient of deep tissue directly, and it has drawn attention from research institutions and medical companies around the world.

However, light intensity attenuates gradually as it propagates through tissue. Because of this attenuation, conventional optoacoustic images of deep tissue do not truly reflect the tissue's optical absorption coefficient, which undermines the accuracy and reliability of deep tissue imaging. Existing QOAT methods also have drawbacks, including heavy computational cost, long imaging times, poor stability, strong dependence on prior information, and large errors.
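The attenuation problem can be illustrated with a toy one-dimensional model. In optoacoustics the initial pressure is commonly written p0 = Γ·μa·Φ, where Γ is the Grüneisen parameter and Φ is the local light fluence; under the diffusion approximation, fluence in a homogeneous medium decays roughly as exp(−μeff·z), with μeff = sqrt(3μa(μa + μs′)). The sketch below assumes this simple exponential model and illustrative tissue values (not taken from the study) to show why two identical absorbers at different depths produce very different p0, and why μa can be recovered only once Φ is known.

```python
import math

# Illustrative optical properties (assumed values, not from the study)
MU_A_BG = 0.01      # background absorption coefficient, 1/mm
MU_S_PRIME = 1.0    # reduced scattering coefficient, 1/mm
MU_A_TARGET = 0.2   # the same absorber placed at two depths, 1/mm
GRUNEISEN = 1.0     # Grueneisen parameter, set to 1 for simplicity


def effective_attenuation(mu_a: float, mu_s_prime: float) -> float:
    """Diffusion-approximation effective attenuation coefficient (1/mm)."""
    return math.sqrt(3.0 * mu_a * (mu_a + mu_s_prime))


def fluence(depth_mm: float, mu_eff: float, phi0: float = 1.0) -> float:
    """Toy fluence model: pure exponential decay with depth (assumption)."""
    return phi0 * math.exp(-mu_eff * depth_mm)


def initial_pressure(mu_a: float, phi: float, gruneisen: float = GRUNEISEN) -> float:
    """Optoacoustic source term p0 = Gamma * mu_a * Phi."""
    return gruneisen * mu_a * phi


# The toy ignores the absorber's own effect on fluence and uses the background.
mu_eff = effective_attenuation(MU_A_BG, MU_S_PRIME)

results = {}
for depth in (2.0, 15.0):
    phi = fluence(depth, mu_eff)
    p0 = initial_pressure(MU_A_TARGET, phi)
    # With the fluence known, mu_a follows by dividing it out of p0.
    mu_a_recovered = p0 / (GRUNEISEN * phi)
    results[depth] = (p0, mu_a_recovered)
    print(f"depth={depth:5.1f} mm  p0={p0:.4f}  recovered mu_a={mu_a_recovered:.3f}")
```

The deep absorber's p0 is roughly an order of magnitude weaker even though its μa is identical. In real tissue Φ also depends on the unknown μa itself, which makes quantitative reconstruction a nonlinear problem; this toy sidesteps that by holding the fluence model fixed.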

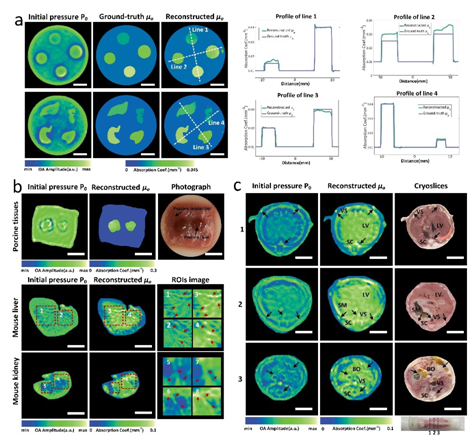

Comparison of traditional optoacoustic reconstruction (P0) images and QOAT reconstruction (μa) images

In recent years, the successful application of deep learning to medical imaging has demonstrated its potential for biomedical optical imaging. Deep learning generally involves two stages: training and testing. To exploit the full learning capacity of a deep neural network, the training stage requires a large amount of labeled, paired training data.

However, for many biomedical imaging methods it is difficult to obtain ground-truth values for deep tissue, especially living tissue. As a result, it is hard to assemble a large set of labeled, paired experimental data for training deep neural networks, which has limited the application and adoption of deep learning in biomedical imaging.

Li, Gao, and their team from the School of Precision Instrument and Opto-Electronics Engineering at TJU proposed a deep learning-based QOAT method to address these problems. For the first time, it accurately reconstructs the absorption coefficient of deep tissue without any real labeled experimental data.

One innovation of the method is that it overcomes the shortage of training data for the neural network. Using a style transfer network, the Simulation-to-Experiment End-to-end Data translation network (SEED-Net), the team achieved unsupervised translation between simulation data and experimental data: abundant labeled simulation data are converted into the experimental domain, generating large amounts of labeled “experimental data” for subsequent neural network training.
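The data flow described above, in which labeled simulations are translated into the experimental domain while keeping their labels, can be sketched as follows. SEED-Net itself is a trained neural network whose internals this article does not describe; `translate_to_experiment` and `translate_to_simulation` below are hypothetical stand-ins (a gain change plus noise, and its partial inverse) used only to show how each translated image inherits the ground-truth μa map of the simulation it came from. The cycle-consistency term is the loss commonly used for unpaired translation, as in CycleGAN; whether SEED-Net uses exactly this objective is an assumption.

```python
import random
from typing import Callable, List, Tuple

Image = List[float]  # a flattened image, kept 1-D for simplicity


def translate_to_experiment(sim: Image, rng: random.Random) -> Image:
    """Hypothetical sim->exp translator: gain change plus measurement-like noise."""
    return [0.8 * v + rng.gauss(0.0, 0.01) for v in sim]


def translate_to_simulation(exp: Image) -> Image:
    """Hypothetical exp->sim translator: undoes the gain, cannot undo the noise."""
    return [v / 0.8 for v in exp]


def cycle_consistency_l1(x: Image,
                         forward: Callable[[Image], Image],
                         backward: Callable[[Image], Image]) -> float:
    """Mean absolute error between x and backward(forward(x))."""
    x_rec = backward(forward(x))
    return sum(abs(a - b) for a, b in zip(x, x_rec)) / len(x)


def build_training_set(sim_pairs: List[Tuple[Image, Image]],
                       rng: random.Random) -> List[Tuple[Image, Image]]:
    """Translate each simulated p0 image and reuse its mu_a label unchanged."""
    return [(translate_to_experiment(p0, rng), mu_a) for p0, mu_a in sim_pairs]


rng = random.Random(0)

# Toy labeled simulations: mu_a maps and p0 images under an assumed uniform fluence of 0.5.
sim_pairs: List[Tuple[Image, Image]] = []
for _ in range(4):
    mu_a = [rng.uniform(0.01, 0.2) for _ in range(16)]
    p0 = [0.5 * m for m in mu_a]
    sim_pairs.append((p0, mu_a))

train_set = build_training_set(sim_pairs, rng)
loss = cycle_consistency_l1(sim_pairs[0][0],
                            lambda x: translate_to_experiment(x, rng),
                            translate_to_simulation)
print(f"{len(train_set)} labeled 'experimental' samples, cycle loss={loss:.4f}")
```

The point of the design is that the label never passes through the translator: only the image changes domain, so every translated sample arrives with an exact ground truth that real experiments could not supply.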

“The SEED-Net we proposed not only solves the problem of lacking real labeled experimental data in the QOAT field; we can also use it to generate labeled ‘experimental data’ from simulation data in other biomedical imaging areas, such as diffuse optical and fluorescence tomography, which are also limited by a lack of adequate real labeled experimental data, and so further develop deep learning methods for biomedical imaging that are suited to practical applications. This method is universally applicable to different optoacoustic imaging systems, other optical imaging techniques, and the entire biomedical imaging field,” Li said. “It also solves the generalization problem of deep learning methods to some extent,” Sun said.

Another innovation is the team’s dual-path neural network, which is built around the physical and mathematical model of optoacoustic imaging and accounts for how both the light intensity distribution and the tissue's optical absorption coefficient shape the initial pressure image. “In the current deep learning-based optoacoustic field, network models developed in other fields are usually applied directly. How to adapt neural networks so that they are closer to the mathematical models of optoacoustic or other imaging technologies will be one of the important problems for deep learning in biomedical imaging,” Li added.
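The relationship the dual-path network is described as accounting for is the standard optoacoustic source model. Here Γ is the Grüneisen parameter, Φ the light fluence, and μs′ the reduced scattering coefficient; this notation is ours and not necessarily the paper's. The key point is that Φ itself depends on μa, so the initial pressure p0 is a nonlinear function of the quantity being sought:

$$
p_0(\mathbf{r}) = \Gamma(\mathbf{r})\,\mu_a(\mathbf{r})\,\Phi\big(\mathbf{r};\,\mu_a,\mu_s'\big)
\quad\Longrightarrow\quad
\mu_a(\mathbf{r}) = \frac{p_0(\mathbf{r})}{\Gamma(\mathbf{r})\,\Phi\big(\mathbf{r};\,\mu_a,\mu_s'\big)}
$$

One possible reading of “dual-path”, offered only as an interpretation, is that one path estimates the fluence Φ and the other the absorption μa, with their product expected to reproduce the measured p0 image; the article does not describe the network's exact structure.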

Using test data acquired with an optoacoustic tomography system developed in-house by the team, the deep learning-based QOAT method successfully reconstructed high-spatial-resolution quantitative images of the optical absorption coefficient distribution in deep tissue.

This is the first time that deep learning-based QOAT methods have been used to achieve “True Perspective” imaging of the optical absorption coefficient of in vivo deep tissue. The successful use of neural networks without real labeled experimental data also broadens the scope for deep learning-based methods in biomedical imaging.

Copyright 1995 - . All rights reserved. The content (including but not limited to text, photo, multimedia information, etc) published in this site belongs to China Daily Information Co (CDIC). Without written authorization from CDIC, such content shall not be republished or used in any form.